Connecting to Microsoft Foundry

An Azure AI connection enables Flow actions to interact with Microsoft Foundry resources, such as deployed Large Language Models (LLMs).

Properties

| Property | Description |
|---|---|
| Name | Name of the connection. |
| API Key | The API key used for authentication. |
| Endpoint | The endpoint URL for the Microsoft Foundry resource. |
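As an illustration only, the three properties can be modeled as a small record with basic sanity checks. The class name `FoundryConnection` and its `validate` rules are hypothetical and are not part of Flow; Flow may validate these fields differently.

```python
from dataclasses import dataclass
from urllib.parse import urlparse


@dataclass(frozen=True)
class FoundryConnection:
    """Hypothetical record mirroring the Name, API Key and Endpoint properties."""

    name: str
    api_key: str
    endpoint: str

    def validate(self) -> list:
        """Return a list of problems; an empty list means the fields look usable."""
        problems = []
        if not self.name.strip():
            problems.append("Name is empty")
        if not self.api_key.strip():
            problems.append("API Key is empty")
        parsed = urlparse(self.endpoint)
        # Assumption: Foundry endpoints are absolute HTTPS URLs.
        if parsed.scheme != "https" or not parsed.netloc:
            problems.append("Endpoint must be an absolute https:// URL")
        return problems


conn = FoundryConnection(
    name="support-bot-gpt",  # hypothetical connection name
    api_key="<your-api-key>",
    endpoint="https://MY-PROJECT.openai.azure.com/openai/v1",
)
print(conn.validate())  # []
```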

Creating a new connection

To add an Azure AI action, select an existing Azure AI connection or create a new one.

We'll walk through creating a new connection to a model deployed in Microsoft Foundry.

- In the Flowchart, click to select the action that you want to create a connection for.
- Select Connection in the property panel.
- Toggle Create New Connection on.
- Fill in the required fields:
  - Name: Enter a unique name for this connection. Choose a name that makes it easy to understand what the connection is for.
  - API Key: Provide the API key associated with the deployed model.
  - Endpoint: Enter the full URL of the deployed model (it should look similar to https://MY-PROJECT.openai.azure.com/openai/v1).
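A quick way to catch a mistyped endpoint before saving the connection is to compare it with the example format. This sketch assumes, based only on the example URL, that the endpoint is an `https://<resource>.openai.azure.com` host whose path is `/openai/v1`; other valid endpoint shapes may exist, and `looks_like_v1_endpoint` is a hypothetical helper, not a Flow function.

```python
from urllib.parse import urlparse


def looks_like_v1_endpoint(endpoint: str) -> bool:
    """Heuristic check against the example https://MY-PROJECT.openai.azure.com/openai/v1.

    Assumption: this shape is inferred from the documented example, not from a Flow rule.
    """
    parsed = urlparse(endpoint.strip())
    host = parsed.hostname or ""  # urlparse lowercases the hostname
    path = parsed.path.rstrip("/")  # tolerate a trailing slash
    return (
        parsed.scheme == "https"
        and host.endswith(".openai.azure.com")
        and path == "/openai/v1"
    )


print(looks_like_v1_endpoint("https://MY-PROJECT.openai.azure.com/openai/v1"))  # True
print(looks_like_v1_endpoint("https://MY-PROJECT.openai.azure.com/"))  # False
```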

To find the API Key and Endpoint, go to the Microsoft Foundry portal and do the following:

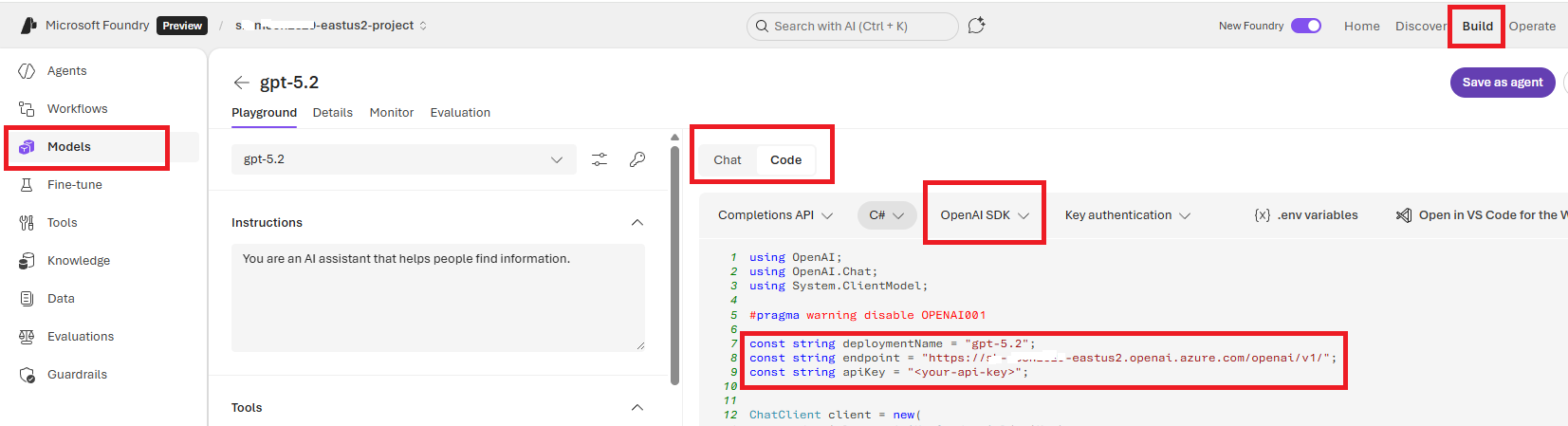

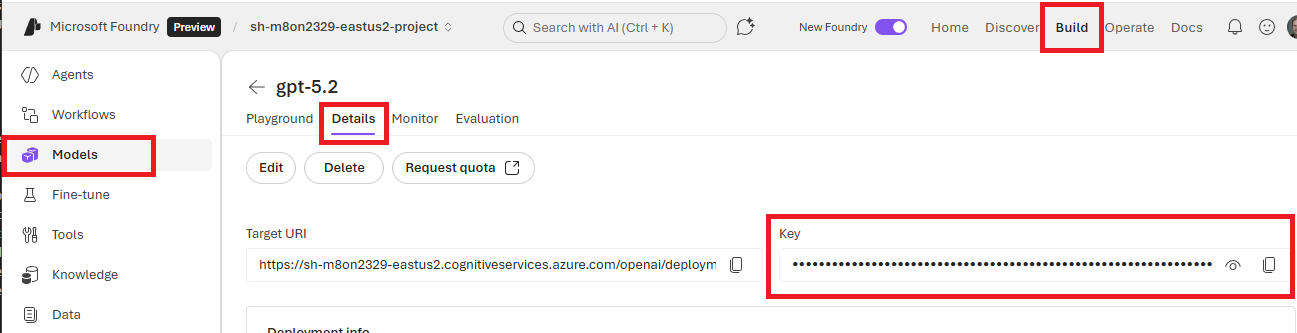

- In the application top bar, go to Build (upper-right corner).
- Select Models from the left menu.
- Select the deployed model.
- In the Playground tab, switch from Chat to Code view, and select OpenAI SDK from the SDKs dropdown.
- Copy the Endpoint URL.
- In the Details tab, copy the Key (this is the API key).
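To see how the two copied values fit together, the sketch below builds (but does not send) a chat completions request in roughly the way an OpenAI-SDK client would: it appends `/chat/completions` to the endpoint and sends the key as a Bearer token. The helper `build_chat_request` and the deployment name `my-deployment` are hypothetical, and this is not how Flow itself issues calls.

```python
import json
import urllib.request


def build_chat_request(endpoint: str, api_key: str, deployment: str, prompt: str) -> urllib.request.Request:
    """Build (but do not send) a chat completions request against the copied endpoint.

    Assumptions: the endpoint is the /openai/v1 base URL from the Code view,
    the key is sent as a Bearer token, and `deployment` is the model deployment name.
    """
    url = endpoint.rstrip("/") + "/chat/completions"
    body = {
        "model": deployment,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        url,
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


req = build_chat_request(
    "https://MY-PROJECT.openai.azure.com/openai/v1",
    "<your-api-key>",
    "my-deployment",  # hypothetical deployment name
    "Hello!",
)
print(req.full_url)  # https://MY-PROJECT.openai.azure.com/openai/v1/chat/completions
# urllib.request.urlopen(req) would send it; omitted here to avoid a live call.
```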

Flow 1.11 (December 2025) and earlier

The following documentation applies to Flow 1.11 (December 2025) and earlier.

Creating a new connection

To add an Azure AI action, select an existing Azure AI connection or create a new one.

Important

Connections to OpenAI models and connections to Foundry models are created differently. See details below.

Create a connection to an Azure Foundry model

If you want to use a Foundry model, you can reuse a single connection across multiple model deployments.

- In the Flowchart, click to select the action that you want to create a connection for.
- Select Connection in the property panel.
- Toggle Create New Connection on.
- Fill in the required fields:
  - Name: Enter a unique name for this connection. Choose a name that makes it easy to understand what the connection is for.
  - API Key: Provide the API key associated with the deployed model.
  - Endpoint: Enter the full URL of the deployed model (e.g., https://xx-m8on1111-eastus2.services.ai.azure.com/models).

To find the API Key and Endpoint, go to the Microsoft Foundry portal and do the following:

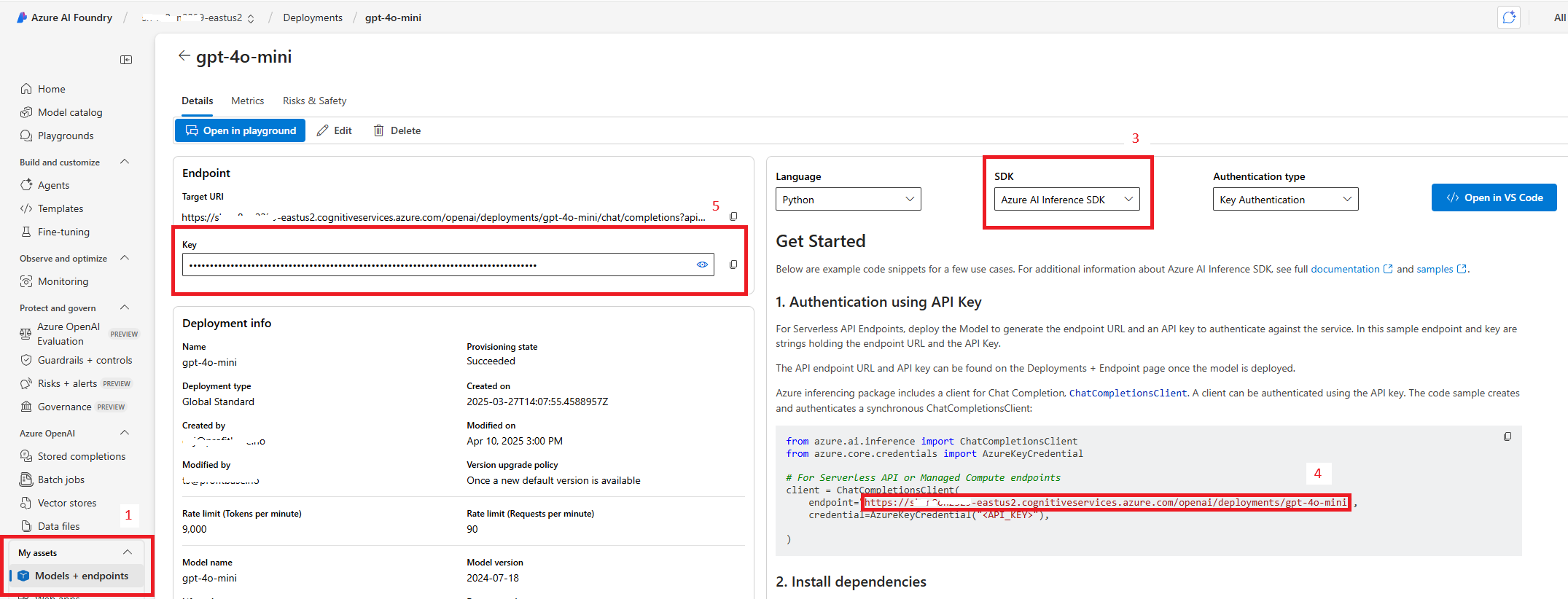

- Click Models + Endpoints.
- Select the deployed model.
- In the SDK dropdown, select Azure AI Inference SDK.
- Copy the Endpoint URL.
- Copy the (API) Key.
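The reason one Foundry connection can serve several deployments is that the `.../models` endpoint does not contain a deployment name. The sketch below assumes the deployment is instead chosen per request through a `model` field in the JSON body, so the URL stays the same for every deployment. `Connection`, `chat_payload`, and the deployment names are hypothetical, and the real service may also expect an `api-version` query parameter, which this sketch omits.

```python
import json
from dataclasses import dataclass


@dataclass(frozen=True)
class Connection:
    """Hypothetical stand-in for a Flow connection (Name, API Key, Endpoint)."""

    name: str
    api_key: str
    endpoint: str


def chat_payload(conn: Connection, deployment: str, prompt: str) -> tuple:
    """Return (url, JSON body) for one chat call.

    Assumption: with a .../models endpoint the deployment is selected by the
    "model" field in the body, so the URL never changes between deployments.
    """
    url = conn.endpoint.rstrip("/") + "/chat/completions"
    body = json.dumps({"model": deployment, "messages": [{"role": "user", "content": prompt}]})
    return url, body


shared = Connection(
    "foundry-eastus2",
    "<your-api-key>",
    "https://xx-m8on1111-eastus2.services.ai.azure.com/models",
)

# Hypothetical deployment names; both calls reuse the same connection.
for deployment in ["deployment-a", "deployment-b"]:
    url, body = chat_payload(shared, deployment, "Hello!")
    print(url, json.loads(body)["model"])
```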

Create a connection to an Azure OpenAI model

If you want to use an OpenAI model, you must create one connection per model deployment, because the deployment name is part of the Endpoint.

- In the Flowchart, click to select the action that you want to create a connection for.
- Select Connection in the property panel.
- Toggle Create New Connection on.
- Fill in the required fields:
  - Name: Enter a unique name for this connection. Choose a name that makes it easy to understand what the connection is for.
  - API Key: Provide the API key associated with the deployed model.
  - Endpoint: Enter the full URL of the deployed model (e.g., https://xx-m8on1111-eastus2.cognitiveservices.azure.com/openai/deployments/gpt-4o-mini). Note that the Endpoint contains the deployment name (gpt-4o-mini).

To find the API Key and Endpoint, go to the Microsoft Foundry portal and do the following:

- Click Models + Endpoints.
- Select the deployed model.
- In the SDK dropdown, select Azure AI Inference SDK.
- Copy the Endpoint URL. Note that the deployment name is in the URL.
- Copy the (API) Key.
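Because the deployment name is embedded in an OpenAI endpoint, it can be read back from the URL, which makes it easy to confirm which deployment a connection targets. This is a minimal sketch assuming the `/openai/deployments/<name>` path segment shown in the example; `deployment_from_endpoint` is a hypothetical helper.

```python
from typing import Optional
from urllib.parse import urlparse


def deployment_from_endpoint(endpoint: str) -> Optional[str]:
    """Return the deployment name from a URL containing /openai/deployments/<name>.

    Returns None when the path has no such segment (for example, a .../models endpoint).
    """
    parts = [p for p in urlparse(endpoint).path.split("/") if p]
    # Look for the consecutive segments "openai", "deployments", <name>.
    for i in range(len(parts) - 2):
        if parts[i] == "openai" and parts[i + 1] == "deployments":
            return parts[i + 2]
    return None


print(deployment_from_endpoint(
    "https://xx-m8on1111-eastus2.cognitiveservices.azure.com/openai/deployments/gpt-4o-mini"
))  # gpt-4o-mini
```

A connection that targets a different deployment therefore needs a different Endpoint, which is why one connection per deployment is required.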

Dynamic Connections

A Dynamic Connection can override a static connection during Flow execution.

This is useful when credentials or target endpoints are determined at runtime (e.g., in multi-tenant environments).
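The override rule can be illustrated with a tiny resolver: if a dynamic connection is supplied at runtime, it is used; otherwise the statically configured connection applies. This is a sketch of the concept only. `Connection`, `resolve_connection`, the per-tenant lookup table, and the tenant names are all hypothetical and do not reflect how Flow implements Dynamic Connections.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Connection:
    """Hypothetical stand-in for a Flow connection (Name, API Key, Endpoint)."""

    name: str
    api_key: str
    endpoint: str


def resolve_connection(static: Connection, dynamic: Optional[Connection] = None) -> Connection:
    """A dynamic connection, when supplied at runtime, takes precedence over the static one."""
    return dynamic if dynamic is not None else static


# Hypothetical per-tenant lookup populated at runtime.
tenant_connections = {
    "contoso": Connection("contoso-ai", "<contoso-key>", "https://CONTOSO.openai.azure.com/openai/v1"),
}

default = Connection("default-ai", "<default-key>", "https://MY-PROJECT.openai.azure.com/openai/v1")

for tenant in ["contoso", "fabrikam"]:
    chosen = resolve_connection(default, tenant_connections.get(tenant))
    print(tenant, "->", chosen.name)
# contoso -> contoso-ai
# fabrikam -> default-ai
```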